A lot has changed since I tested Chatbots on Android years ago, so I’m revisiting how an Android phone/tablet user can interact with LLMs. There are many more options today, ranging from cloud services down to on-device models.

Cloud Services

All of the options in this section are thin apps that simply pass your query up into the cloud. On the positive side, this is often the fastest and most featureful approach. On the negative side, you lose all privacy when conversing with a corporate cloud.

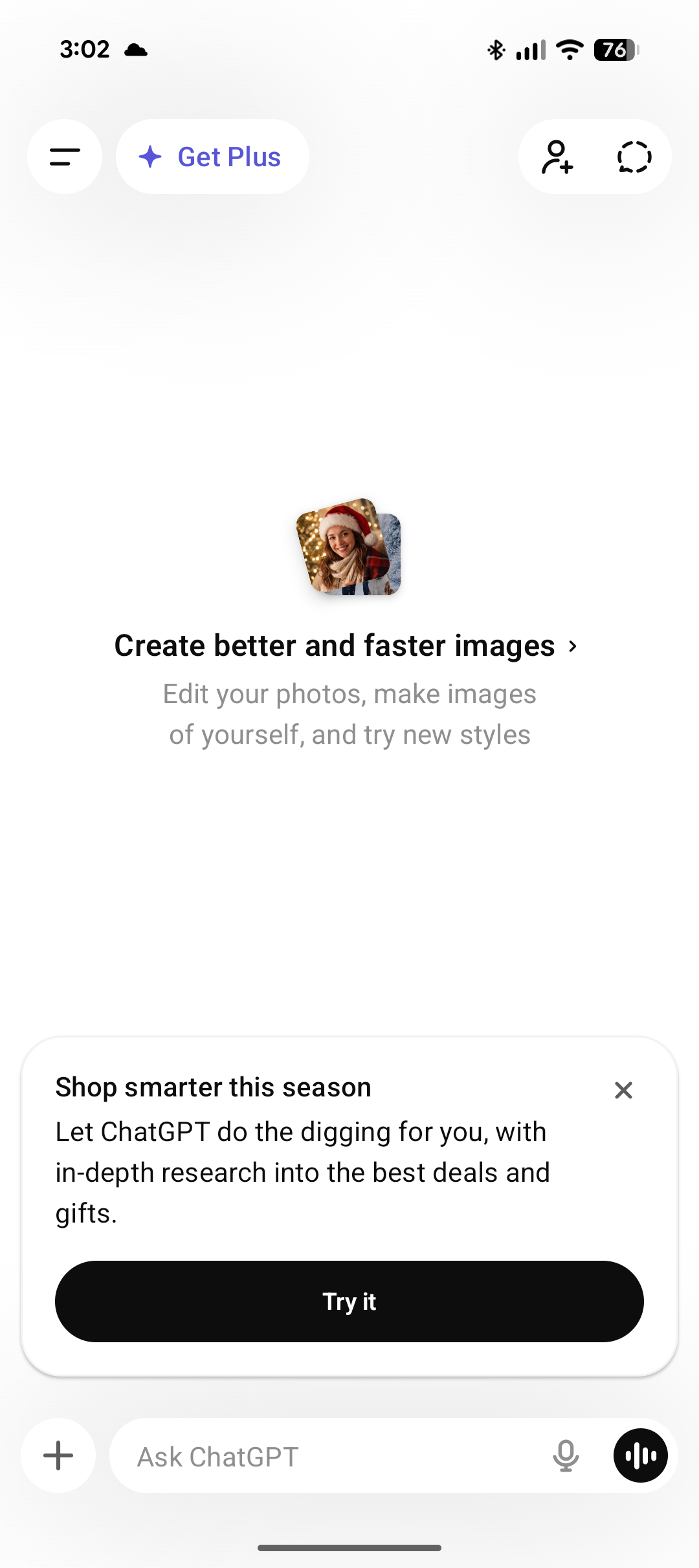

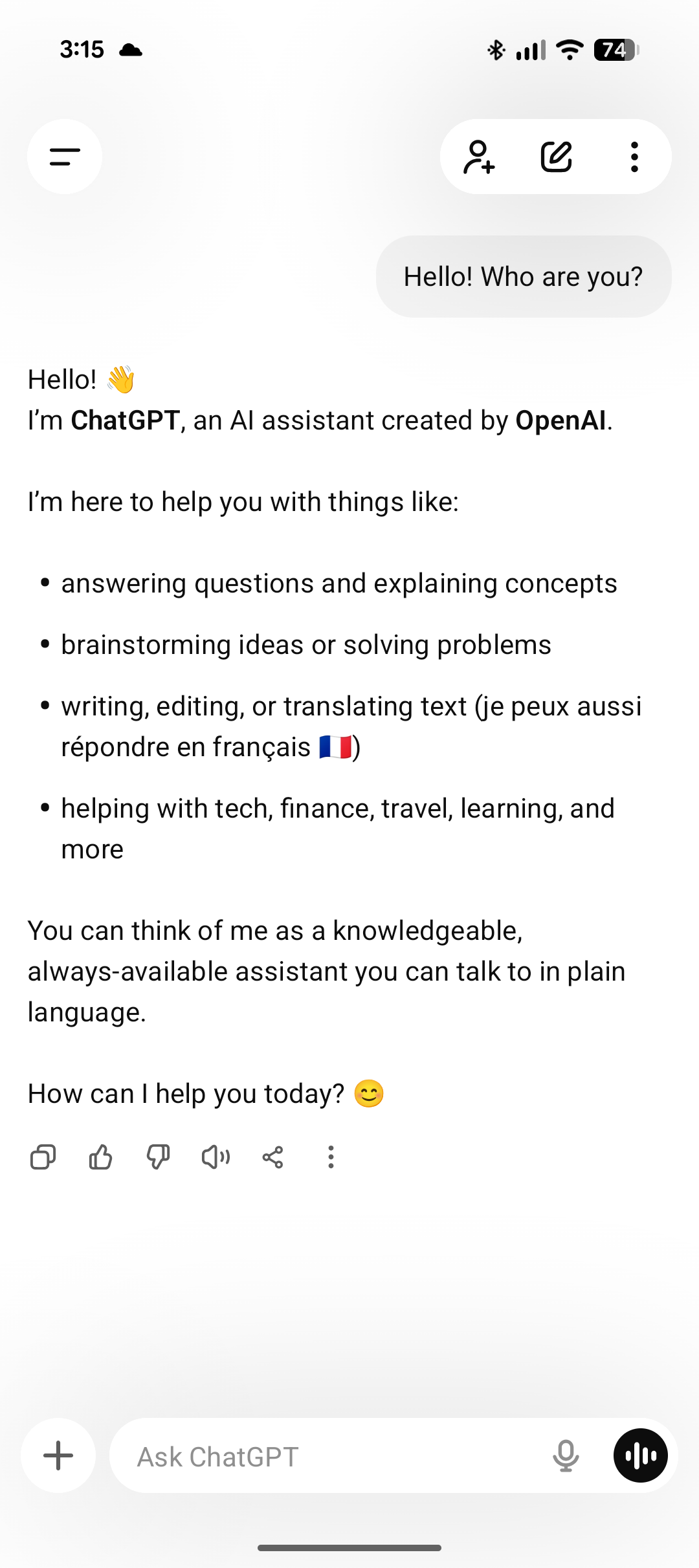

OpenAI ChatGPT

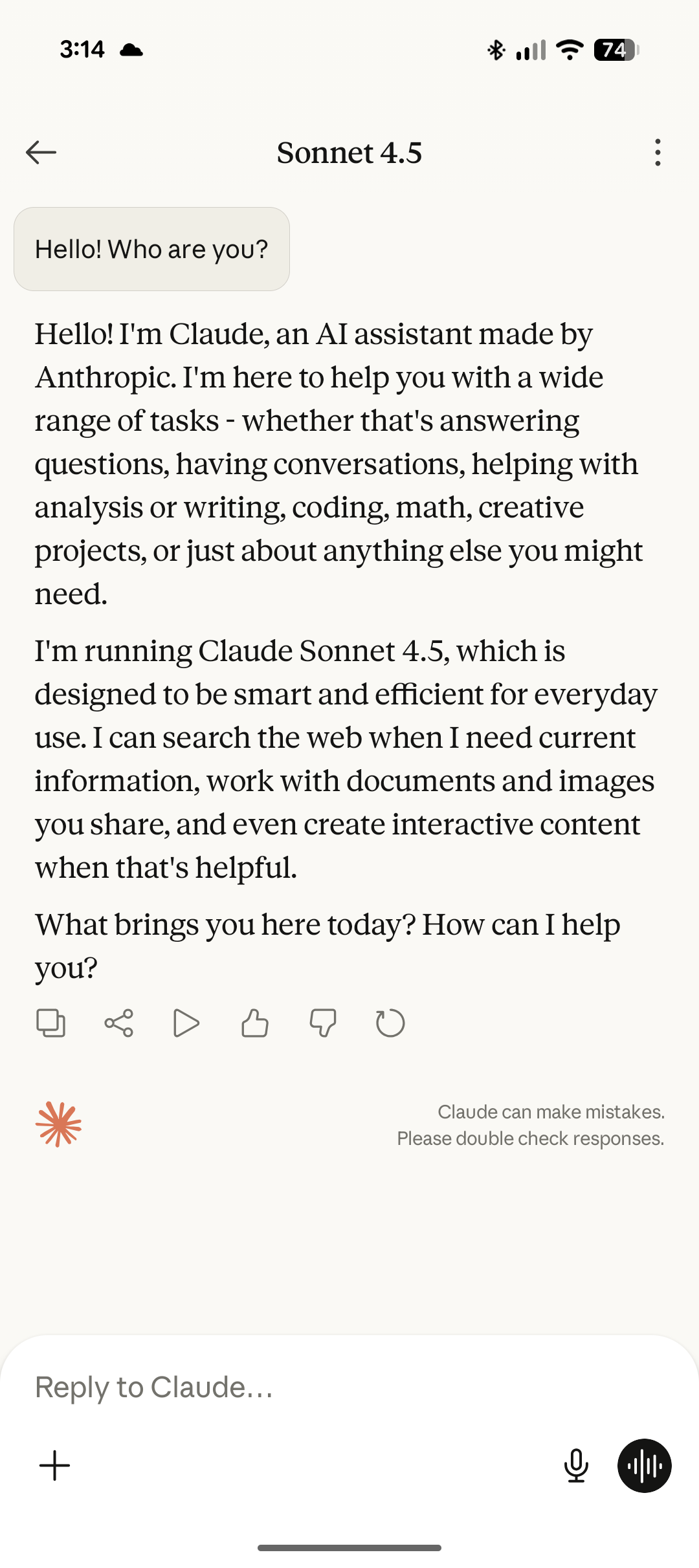

Anthropic Claude

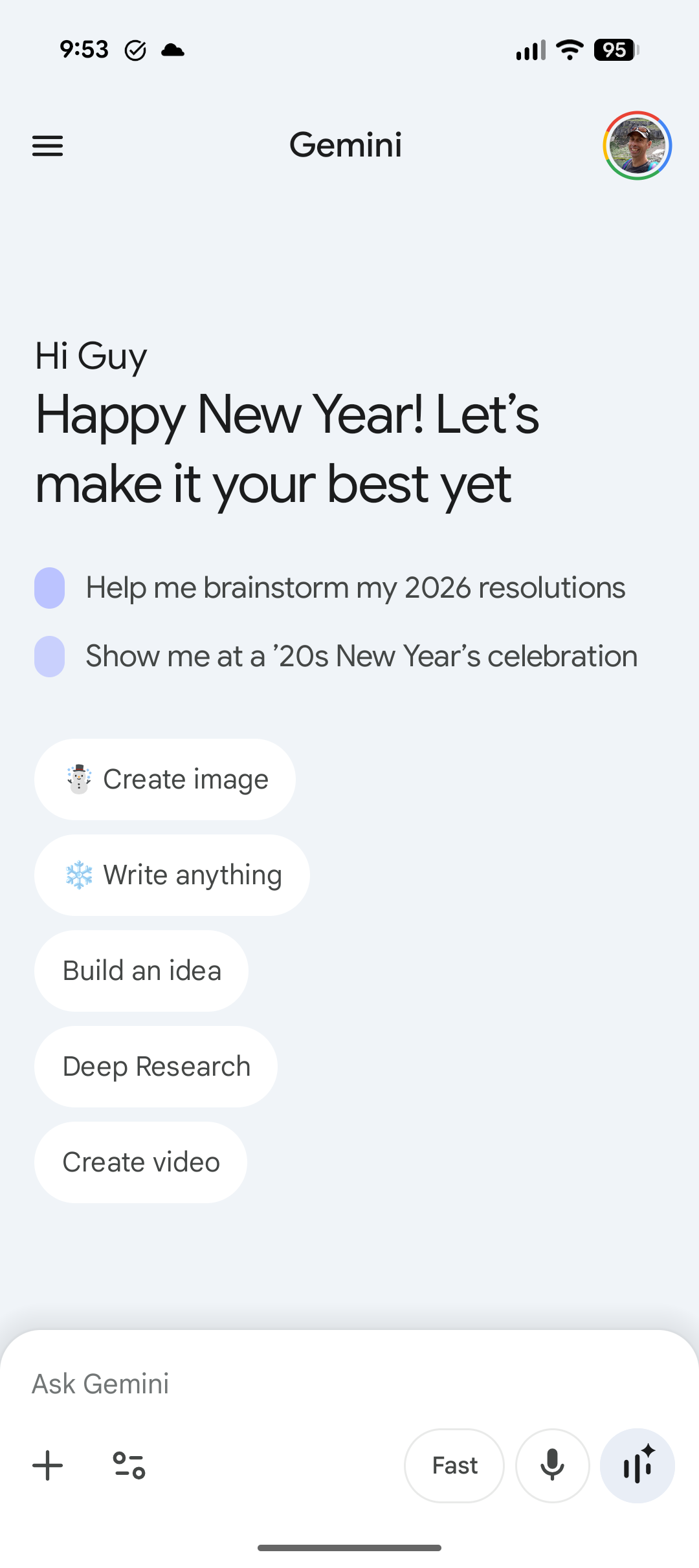

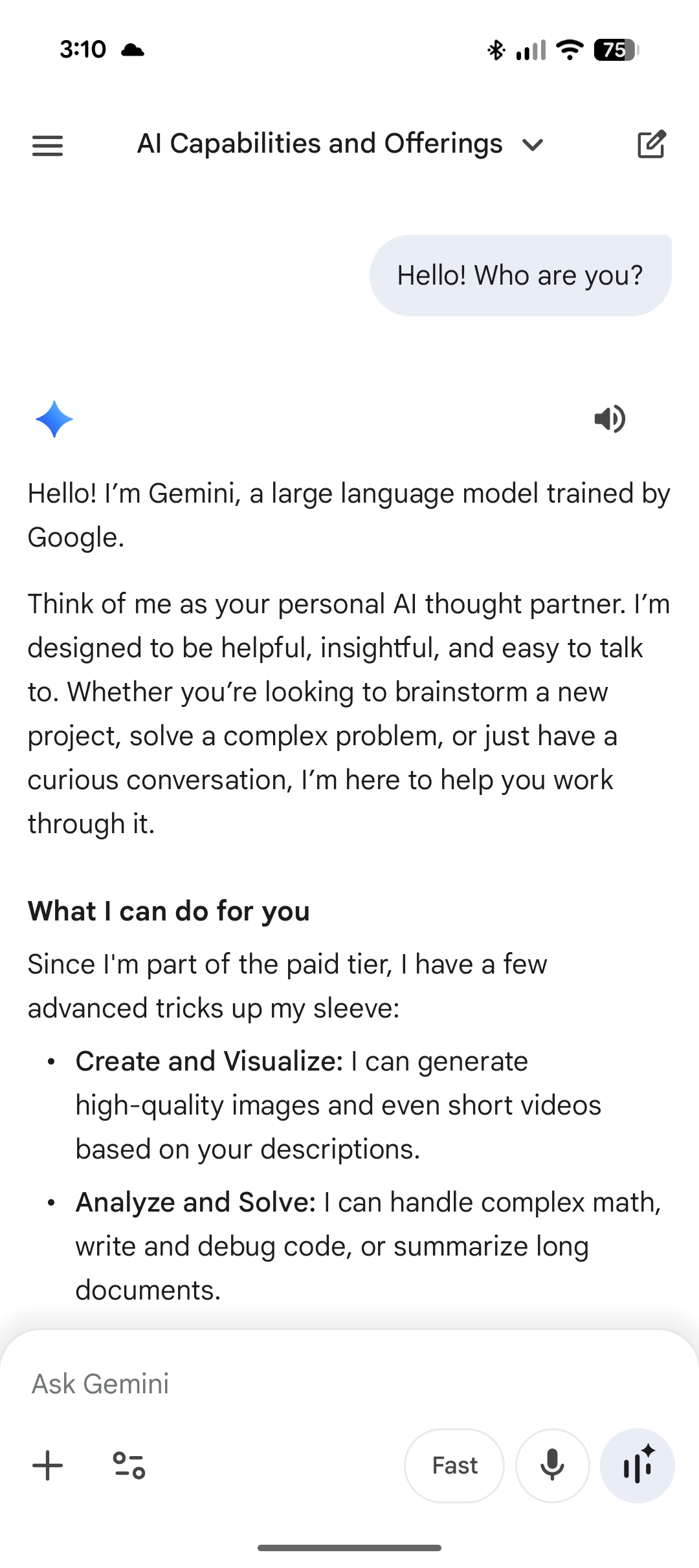

Google Gemini

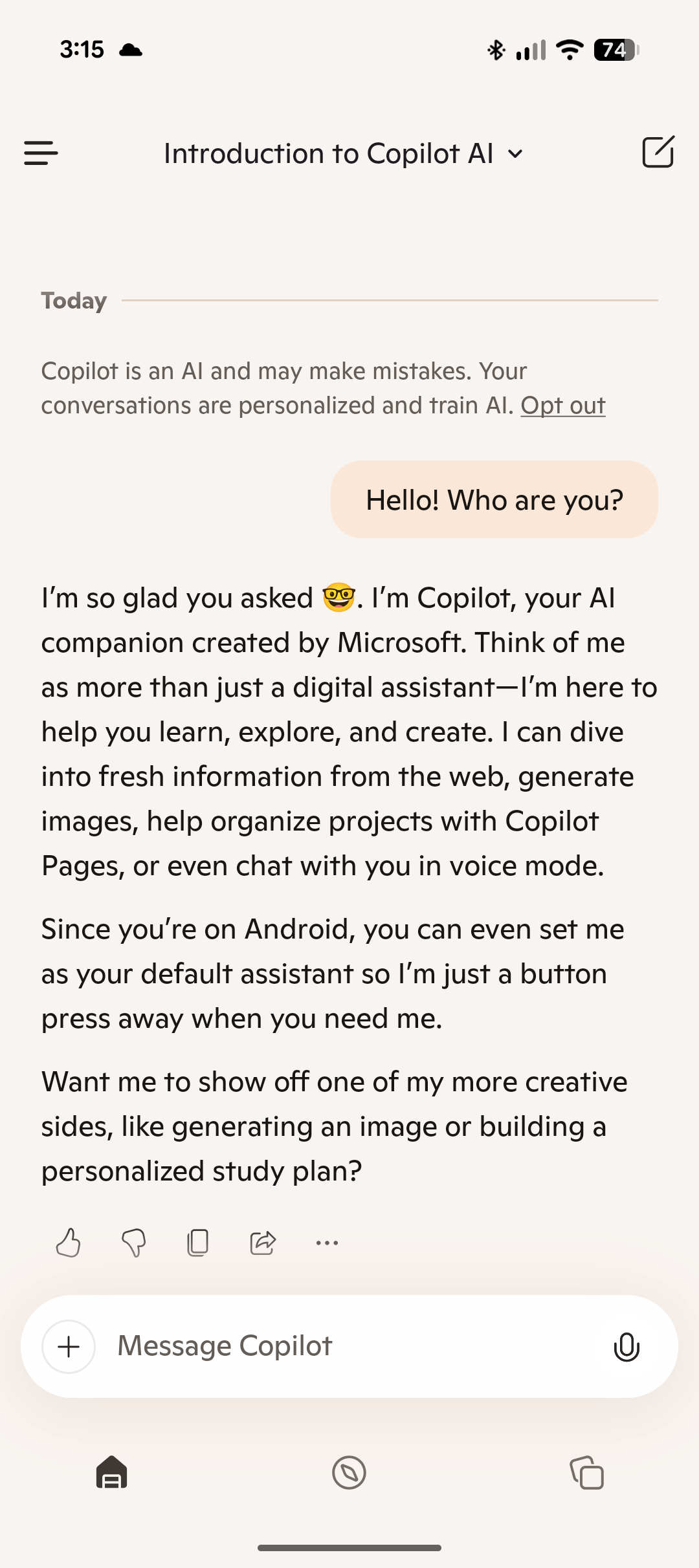

Microsoft Copilot

Mistral AI

Alibaba Qwen

MoonshotAI Kimi

Home LAN LLMs

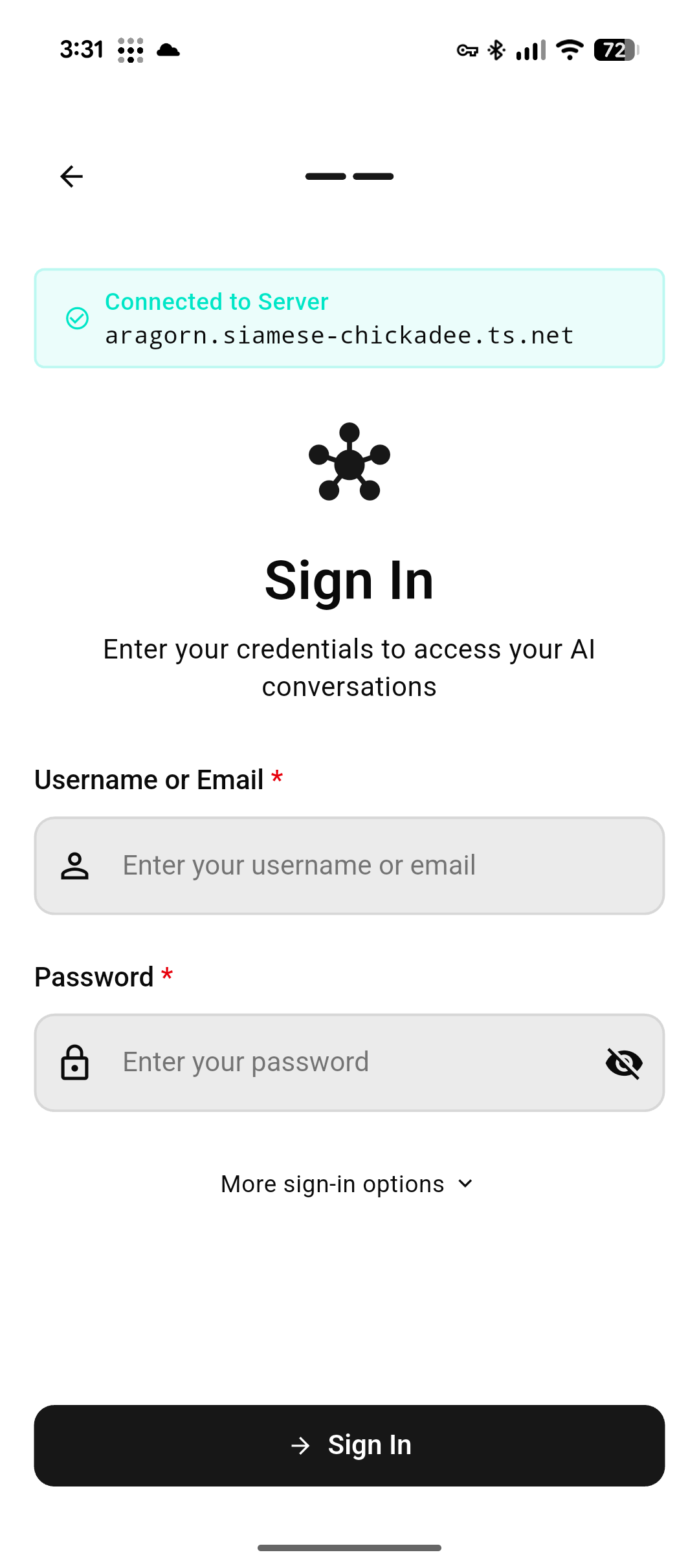

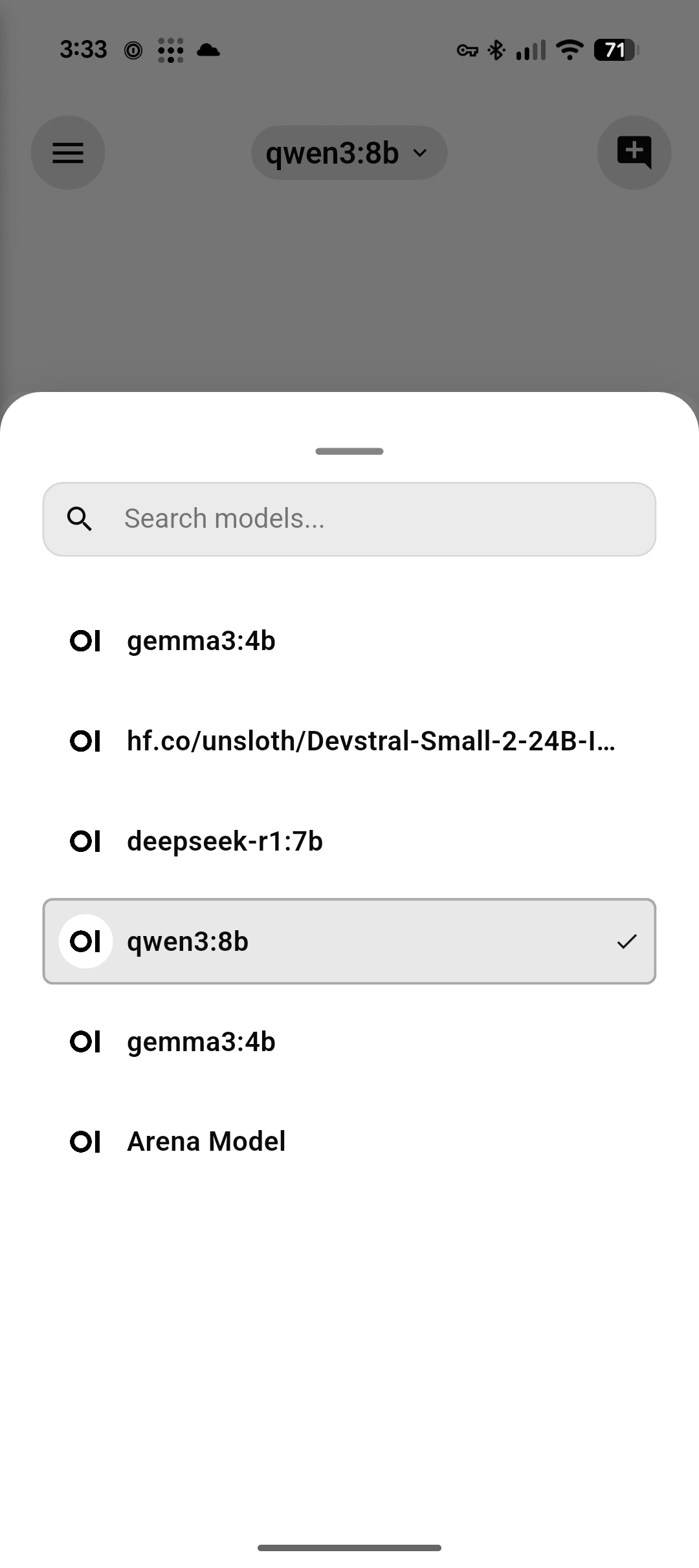

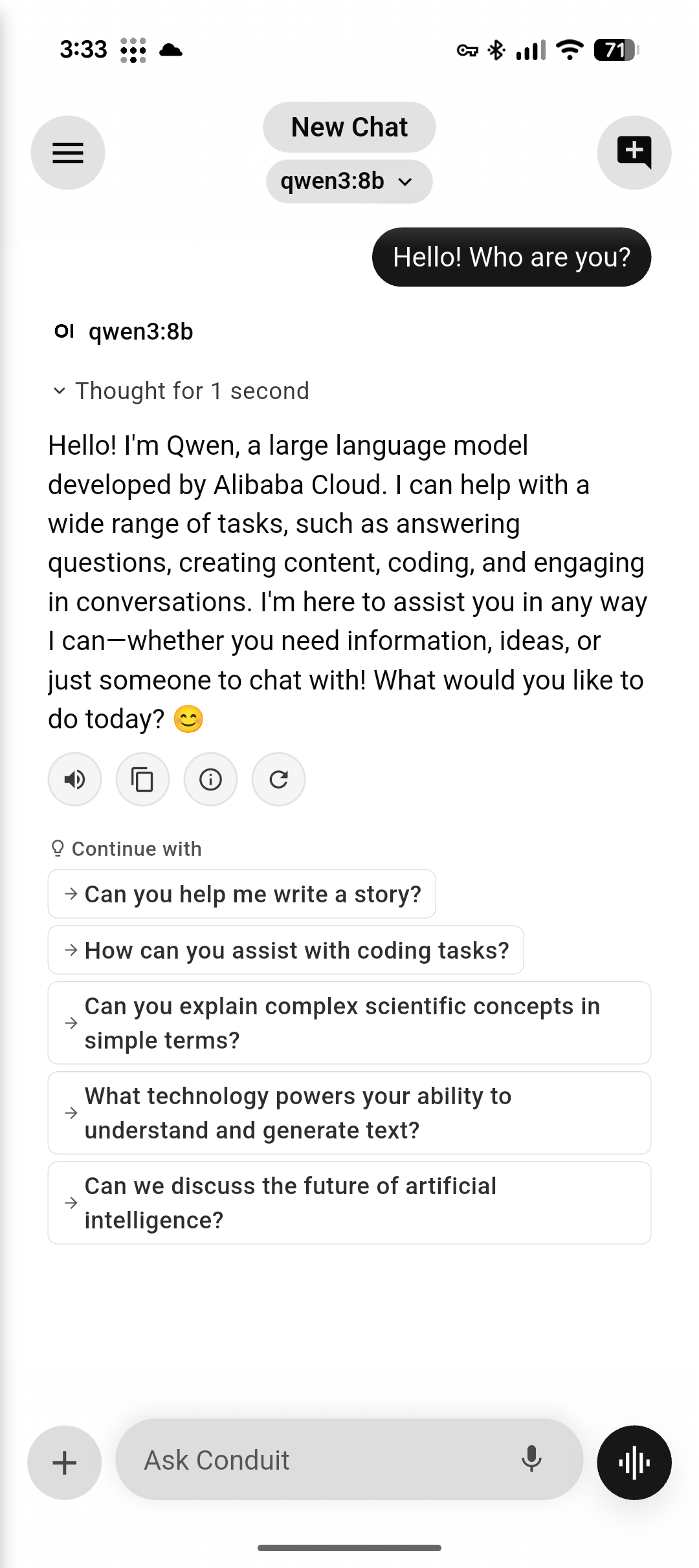

I previously deployed private Gemma, Mistral, and Qwen LLMs on my home LAN, running Ollama on each PC with a single instance of OpenWebUI fronting them all. I then went looking for a mobile phone app to access my home LLMs. I found Conduit, which I connected to OpenWebUI via Tailscale on my Unraid server.
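For anyone curious what sits underneath that stack, here is a minimal Python sketch of a request to a single Ollama instance on the LAN. It talks to Ollama directly rather than going through OpenWebUI or Conduit, so it is not what the app itself does. The hostname `desktop.example` and the model name `gemma` are placeholders I made up for illustration; the port is Ollama's default, and the endpoint is its `/api/generate` route with streaming turned off.

```python
import json
import urllib.request

OLLAMA_PORT = 11434  # Ollama's default HTTP port


def build_generate_request(host: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"http://{host}:{OLLAMA_PORT}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(host: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    request = build_generate_request(host, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, Ollama replies with one JSON object
    # whose "response" field holds the full completion.
    return body["response"]


if __name__ == "__main__":
    # Hypothetical host and model name; substitute your own.
    print(ask("desktop.example", "gemma", "Say hello in five words."))
```

Over Tailscale, the same call should work from outside the house as long as the host name or address resolves on the tailnet and Ollama is listening on a reachable interface.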

Conduit OpenWebUI

On Device Models

The real future of LLMs will likely be on edge devices themselves as phones/tablets get more powerful hardware. Even now, my mid-range Google Pixel 9a can run local LLMs quite effectively.

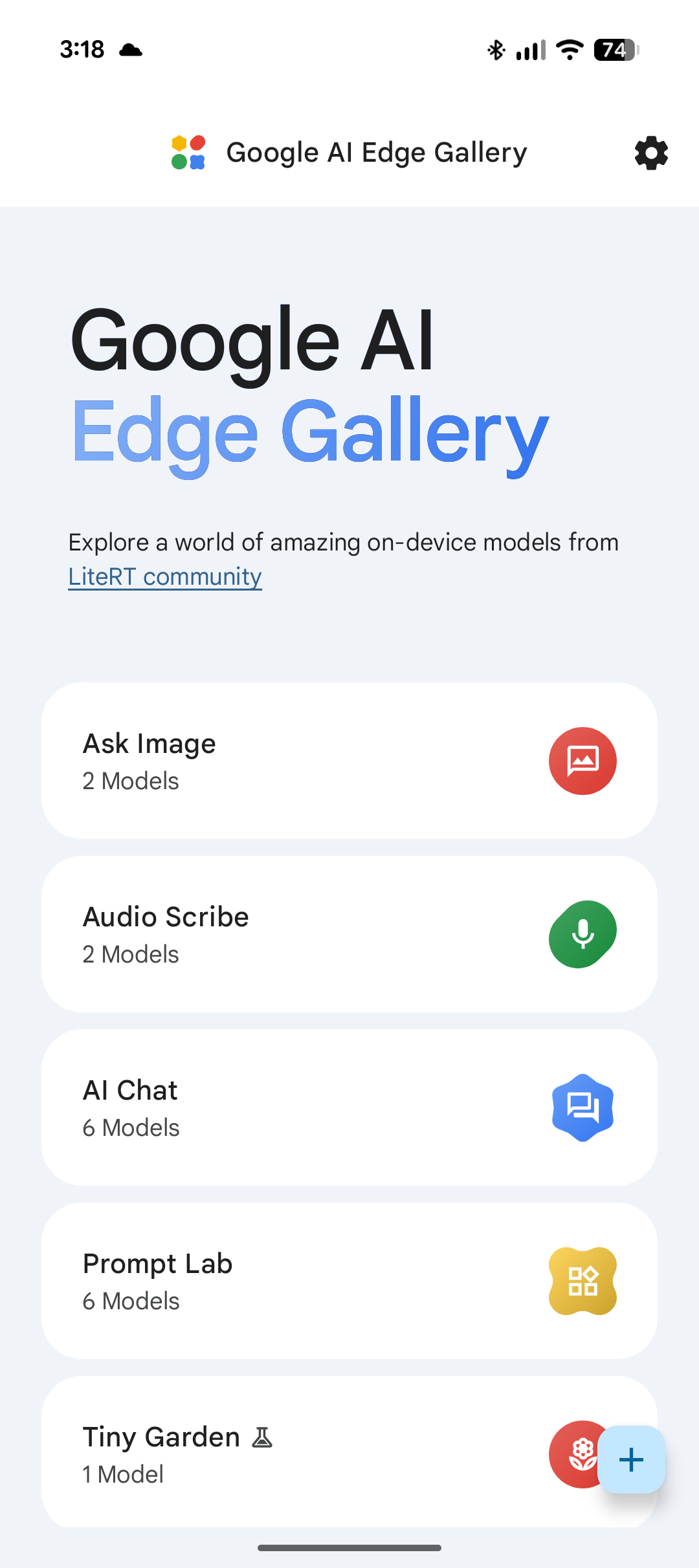

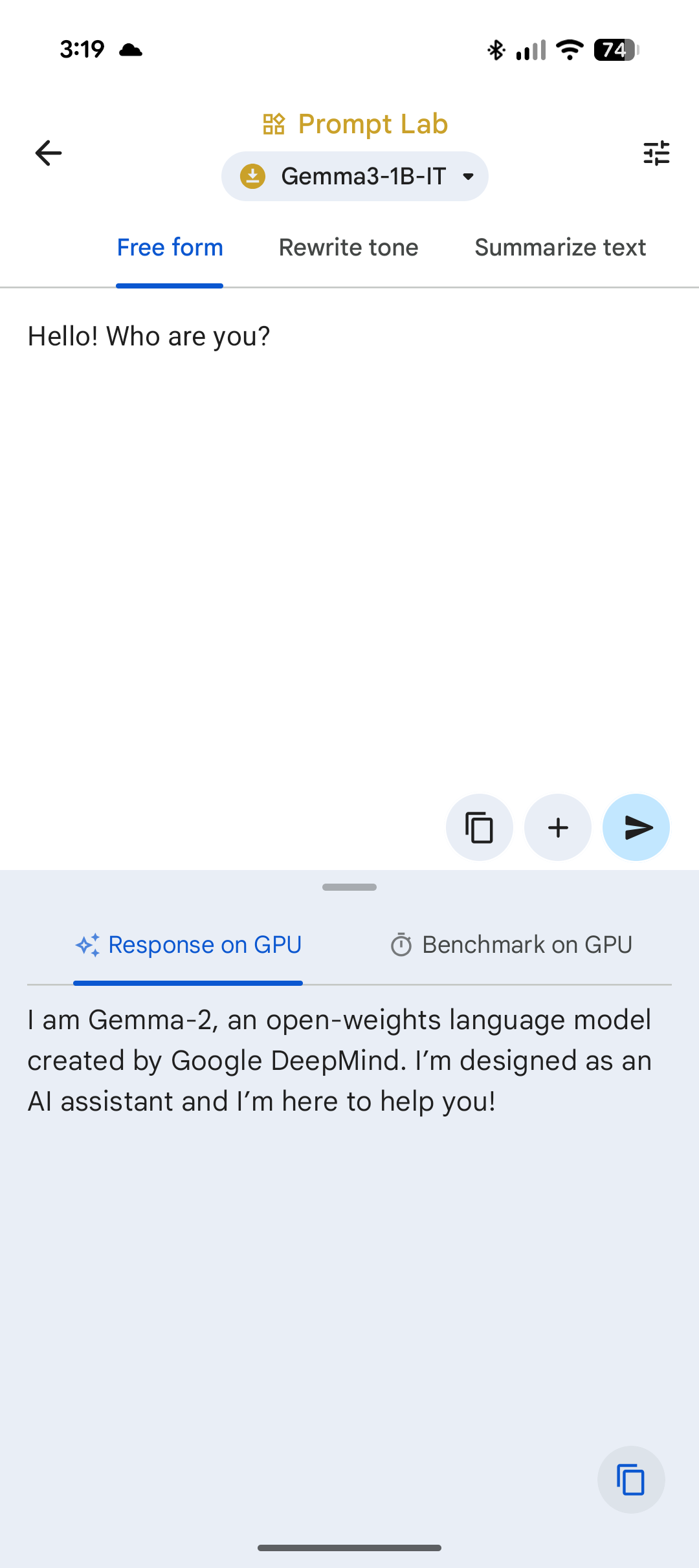

Google Edge

The Edge app from Google is more of a playground demonstration than a real Chatbot app. They are mainly trying to attract developers looking to include AI in their apps, without requiring a network connection or cloud service.

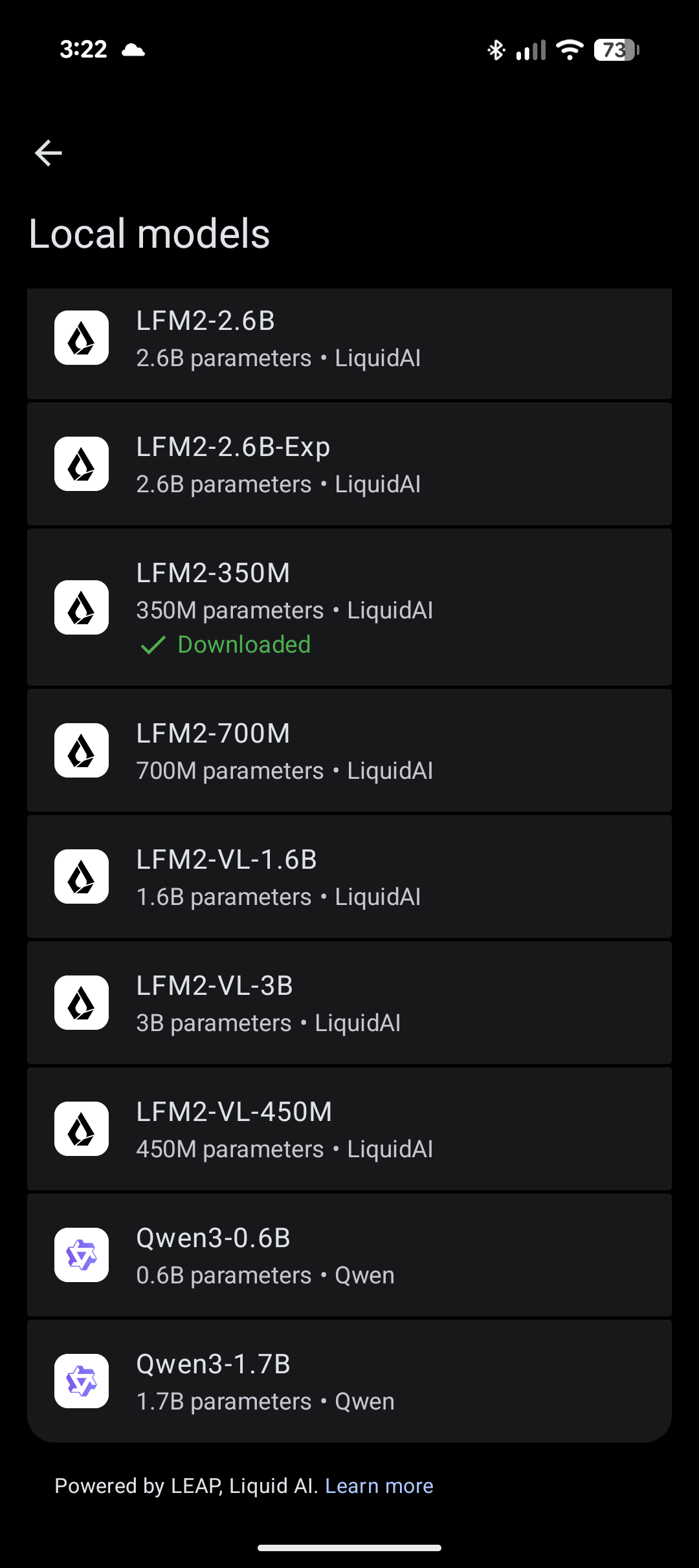

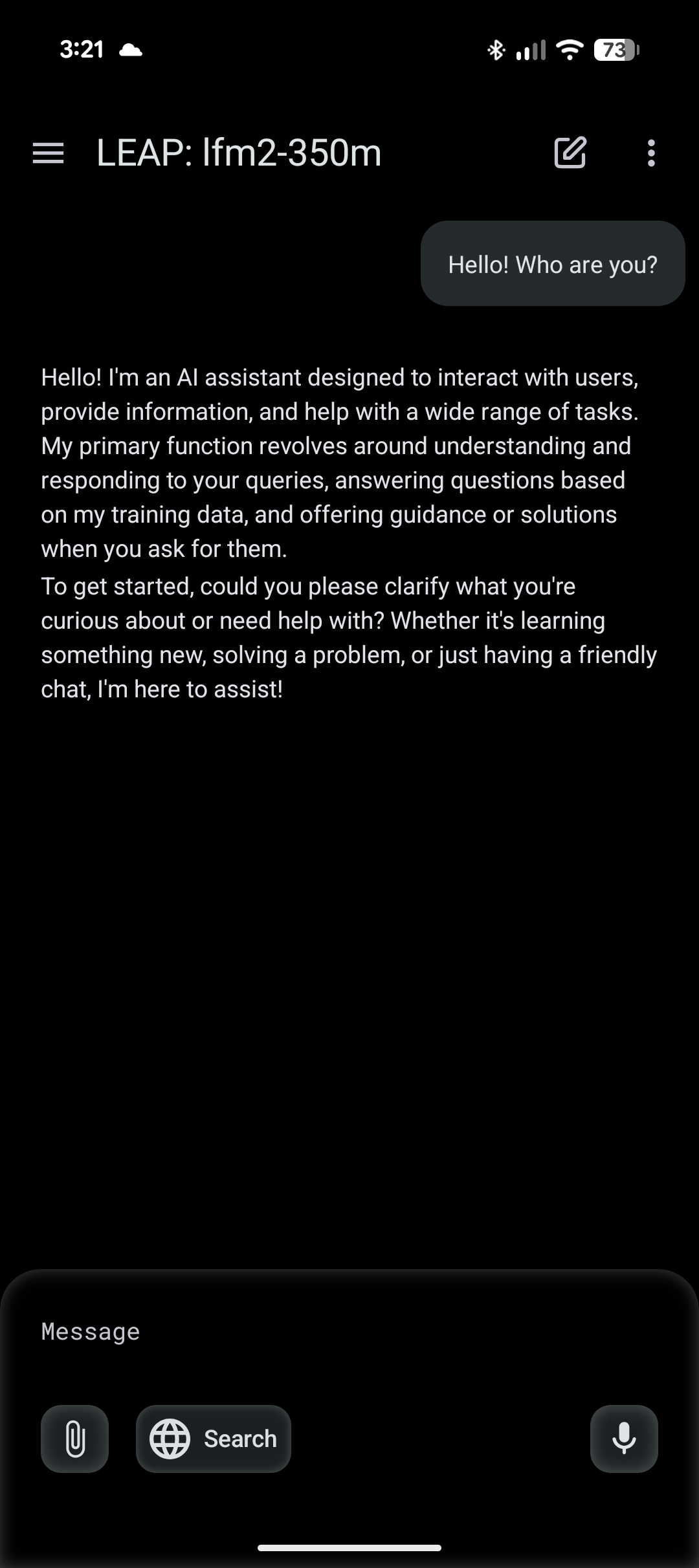

Apollo LEAP

Similarly, the LEAP models in Apollo seem to be a developer demonstration, aiming for adoption and integration.

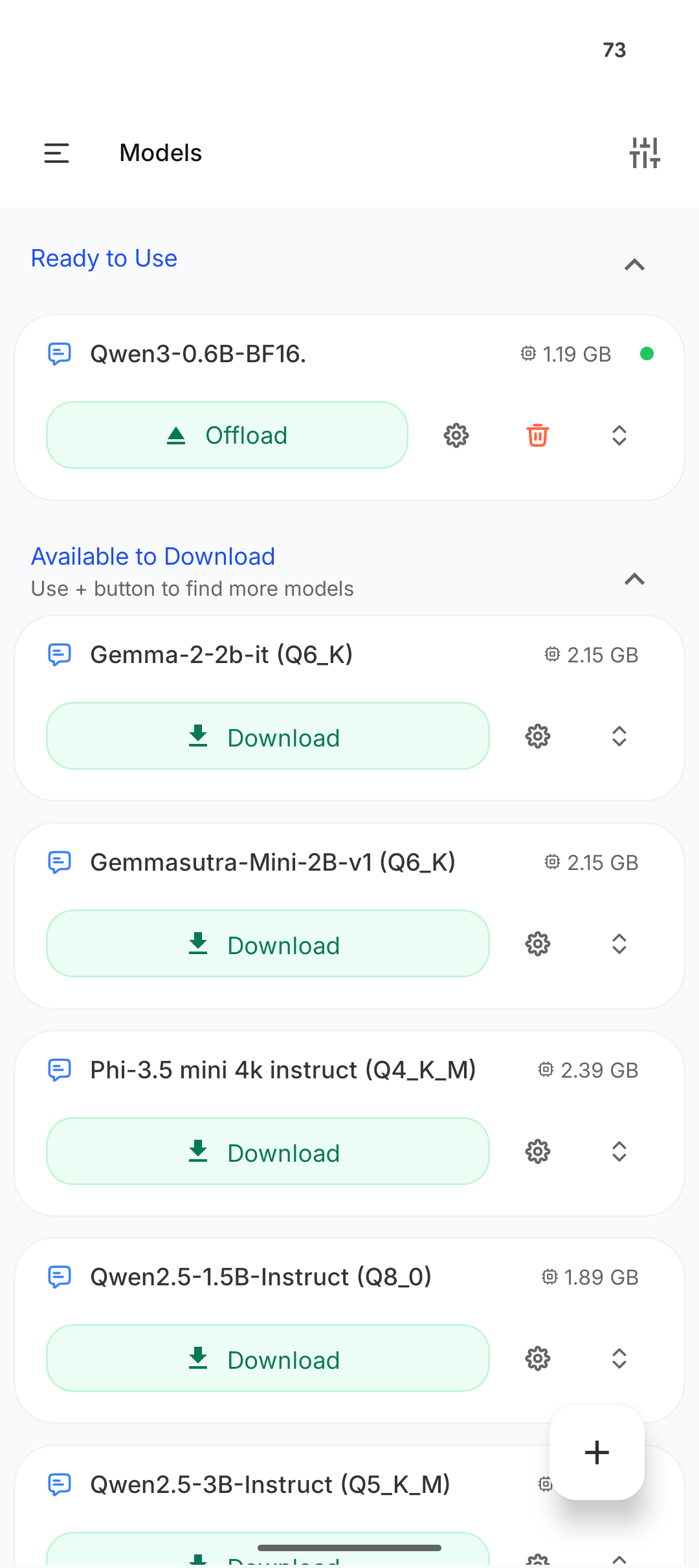

Pocketpal AI

Pocketpal seems to be the leader among true on-device Chatbots, offering a wide selection of free models.

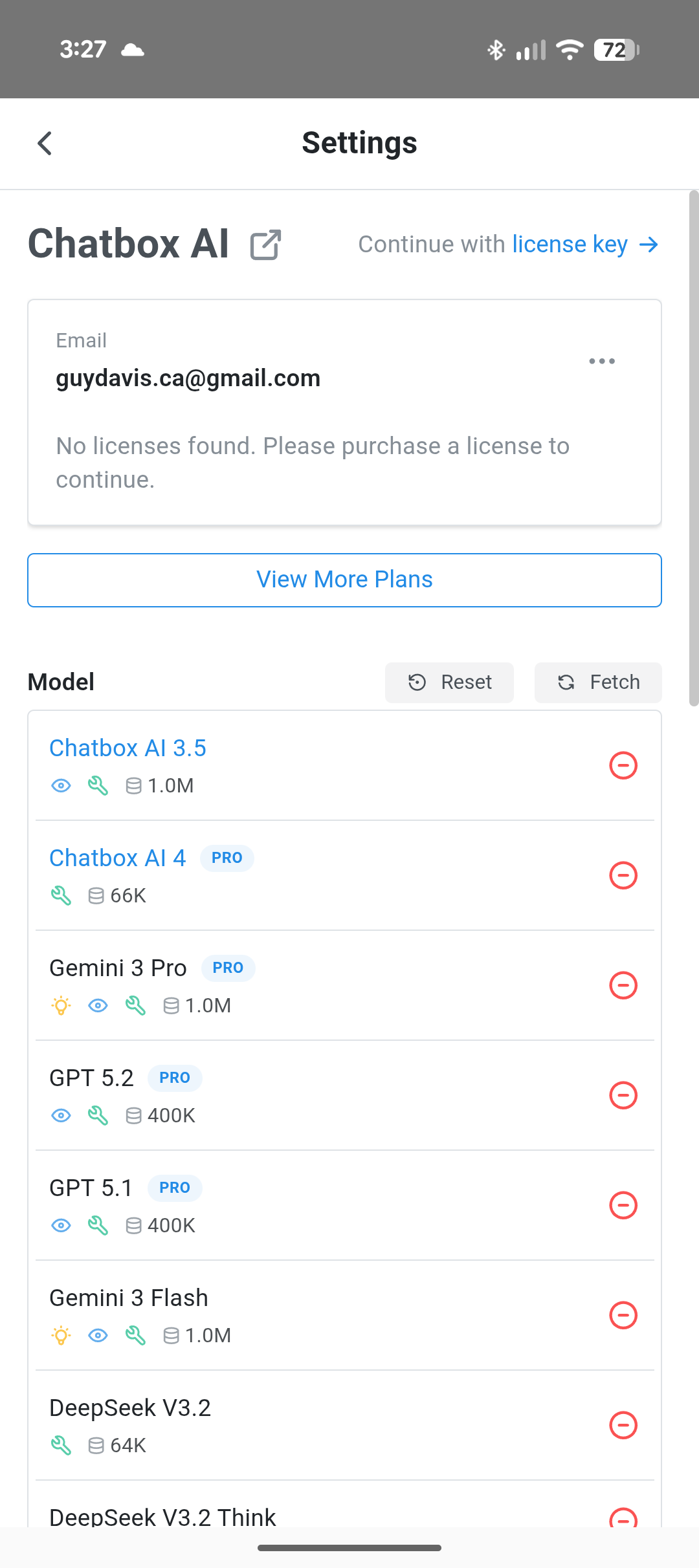

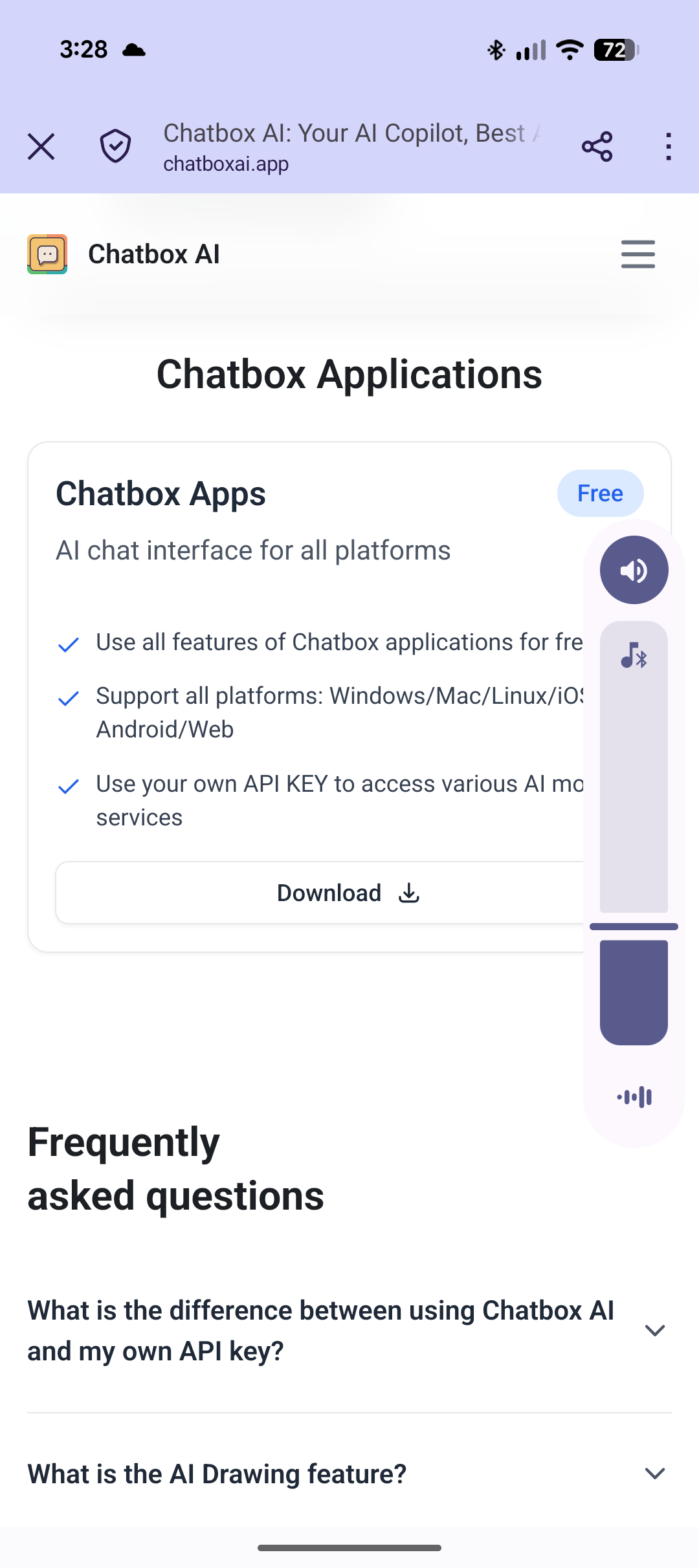

Chatbox AI

On the other hand, ChatboxAI mentioned a “Free” version, but I couldn’t find a way to get to it; I was only shown license sales pages…

More in this series…

- LLMs on Android Apps accessing Cloud Services