This covers my work on the first lesson of the Fast AI course.

Relaunching Cloud GPU System

Following the FastAI guide on returning to work, I can easily relaunch the GCP instance created last month. Since it’s been awhile, I perform some basic updates.

Launching my Ubuntu shell on Windows 10, I can proxy the Juypter notebook to my local browser:

export ZONE='us-west2-b'

export INSTANCE_NAME='my-fastai-instance'

gcloud compute ssh --zone=$ZONE jupyter@$INSTANCE_NAME -- -L 8080:localhost:8080

cd tutorials/fastai/course-v3

git pull

sudo /opt/anaconda3/bin/conda install -c fastai fastai

FastAI - Lesson 1

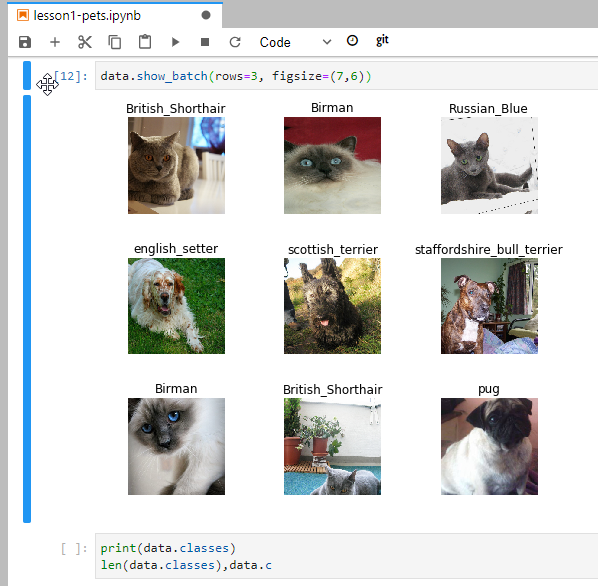

Getting started with the lessons, I was quite impressed by the power of dynamic Jupyter notebooks. The interactive browser view was great for quickly working with data and training models.

The code editor even offered code completion via keyboard shortcut. Very slick!

Classifier

The FastAI library made using the Resnet model quite simple. As the instructor mentioned, this model is still a very high-performing image classifier, even today.

Takeaways

This was a good introduction to transfer learning that trains a machine learning model using a labelled data set, in this case: cats vs dogs. The trained model can then be validated using a test set, measuring the accuracy via loss function. Really emphasized the importance of iteratively improving the model, based on measured accuracy.

Another interesting tidbit was the instructor’s discussion of GPU VRAM size for training these models. The GCP instance I’m using has a single Nvidia Tesla P4 GPU offering 8 GB GDDR5 memory. In the context of gaming GPUs these days, the range seems to be 6 GB in an NVidia RTX 2060 up to 16 GB in the AMD Radeon VII. Workstation cards such as the Nvidia Titan offer 24 GB, but are very expensive. Seems like there is a real opening here for an entry-level GPU that can be used for both gaming and hobbyist machine-learning.

Future

While this supervised approach to image classification is interesting, I wonder how well an unsupervised approach works. I’m guessing that the labelled training set provided to the supervised learner gives it a leg up over unsupervised alternatives.