After confirming that my old laptop was not a machine-learning powerhouse, I decided to return to Google Cloud Platform (GCP) to rent access to a GPU-powered server. I also wanted to see how GCP had evolved since I’d last used GKE in 2017. For this, I followed the Server setup tutorial at Fast AI.

GCloud CLI on Windows

Using WSL (Windows Subsystem for Linux) to run an Ubuntu Bash terminal, you can install and configure the gcloud CLI:

```bash
# Create environment variable for correct distribution
export CLOUD_SDK_REPO="cloud-sdk-$(lsb_release -c -s)"

# Add the Cloud SDK distribution URI as a package source
echo "deb http://packages.cloud.google.com/apt $CLOUD_SDK_REPO main" | sudo tee -a /etc/apt/sources.list.d/google-cloud-sdk.list

# Import the Google Cloud Platform public key
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

# Update the package list and install the Cloud SDK
sudo apt-get update && sudo apt-get install google-cloud-sdk

# Then init gcloud, including login via browser for token
gcloud init
```
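To confirm that the install and `gcloud init` worked, a few read-only commands are handy. The sketch below wraps them in a helper, `check_gcloud_setup`, which is my own hypothetical name and not part of the fast.ai tutorial, so the checks can be rerun later:

```bash
# Hypothetical helper (not from the fast.ai tutorial): read-only checks
# to run after `gcloud init`.
check_gcloud_setup() {
  gcloud --version      # installed Cloud SDK components and their versions
  gcloud auth list      # accounts authorized during the browser login
  gcloud config list    # active account, project, and any default region/zone
}
```

Define it in the WSL shell, then run `check_gcloud_setup`.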

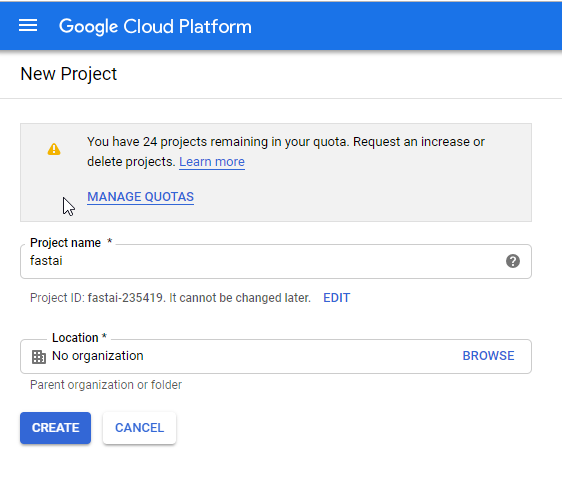

GCloud Project Creation

A project can be created via the CLI or in the GCP admin console, as shown here:

Once the new project is created, one can select it in gcloud with:

```bash
gcloud config set project fastai-12345
```

Replace fastai-12345 above with your own project ID.
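For the CLI route, a minimal sketch might look like the following. The helper name `create_fastai_project`, the `fastai` display name, and the billing account ID in the usage line are placeholders of mine. Project IDs must be globally unique, and a billing account has to be linked before the Compute Engine API can be enabled.

```bash
# Hypothetical helper: create and select a project from the CLI.
# Usage: create_fastai_project PROJECT_ID BILLING_ACCOUNT_ID
create_fastai_project() {
  # Create the project; the ID must be globally unique
  gcloud projects create "$1" --name="fastai"
  # Link a billing account, which Compute Engine requires
  gcloud billing projects link "$1" --billing-account="$2"
  # Make it the default project for later gcloud commands
  gcloud config set project "$1"
  # Enable the Compute Engine API so instances can be created
  gcloud services enable compute.googleapis.com
}
```

For example, `create_fastai_project fastai-12345 000000-AAAAAA-111111`, where the billing ID is made up; `gcloud billing accounts list` shows your real ones.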

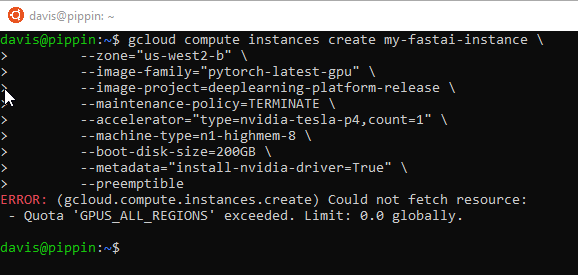

GPU Instance on GCP

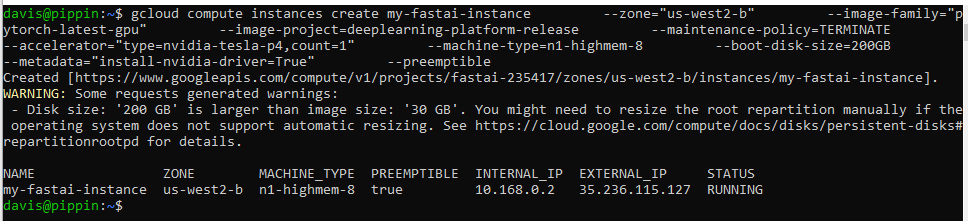

A GPU-enabled instance for running ML notebooks can then be created in the new project with:

```bash
gcloud compute instances create my-fastai-instance \
  --zone="us-west2-b" \
  --image-family="pytorch-latest-gpu" \
  --image-project=deeplearning-platform-release \
  --maintenance-policy=TERMINATE \
  --accelerator="type=nvidia-tesla-p4,count=1" \
  --machine-type=n1-highmem-8 \
  --boot-disk-size=200GB \
  --metadata="install-nvidia-driver=True" \
  --preemptible
```
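If the create command fails because the zone doesn't offer the requested GPU, or because the image family name has changed, these read-only listings can help. This is a sketch under my own assumptions: the helper name is hypothetical, and `~` in `--filter` performs a regular-expression match on the named field.

```bash
# Hypothetical helper: list GPU types and Deep Learning VM images.
# Usage: check_gpu_options ZONE   (e.g. us-west2-b)
check_gpu_options() {
  # Accelerator types (e.g. nvidia-tesla-p4) offered in the given zone
  gcloud compute accelerator-types list --filter="zone~$1"
  # PyTorch image families published in the deeplearning-platform-release project
  gcloud compute images list --project=deeplearning-platform-release \
    --no-standard-images --filter="family~pytorch"
}
```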

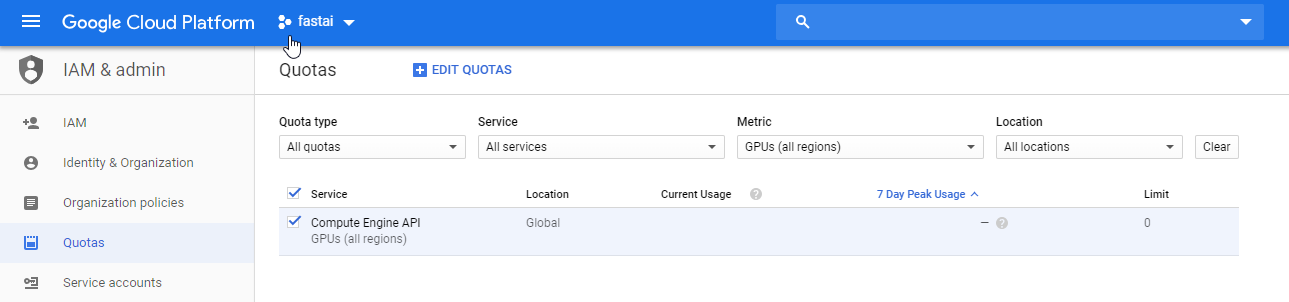

By default, a project’s GPU quota is 0, so you’ll probably need to request an increase to 1.

If so, you can request an increase in the GCP Console by filtering the quota list for the GPU quota, as shown:

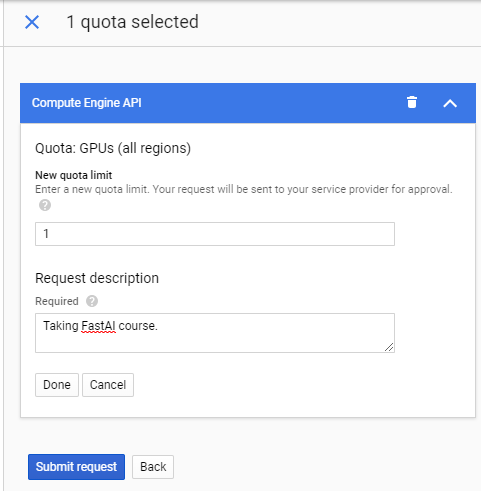

Then select the quota and request the increase on the right:

In my case, GCP Support approved the request within a few hours. I was then able to create the instance:
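Once an increase is approved, the new limits can also be checked from the CLI. This sketch assumes the quota metric names `GPUS_ALL_REGIONS` (project-wide) and `NVIDIA_P4_GPUS` (regional). The grep pattern also matches `PREEMPTIBLE_NVIDIA_P4_GPUS`, and the helper name is hypothetical.

```bash
# Hypothetical helper: print "metric<TAB>limit<TAB>usage" for GPU quotas.
# Usage: show_gpu_quota REGION   (e.g. us-west2 for zone us-west2-b)
show_gpu_quota() {
  # Project-wide GPU quota, which starts at 0
  gcloud compute project-info describe --flatten="quotas[]" \
    --format="value(quotas.metric,quotas.limit,quotas.usage)" | grep "GPUS_ALL_REGIONS"
  # Regional P4 quotas (standard and preemptible)
  gcloud compute regions describe "$1" --flatten="quotas[]" \
    --format="value(quotas.metric,quotas.limit,quotas.usage)" | grep "NVIDIA_P4_GPUS"
}
```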

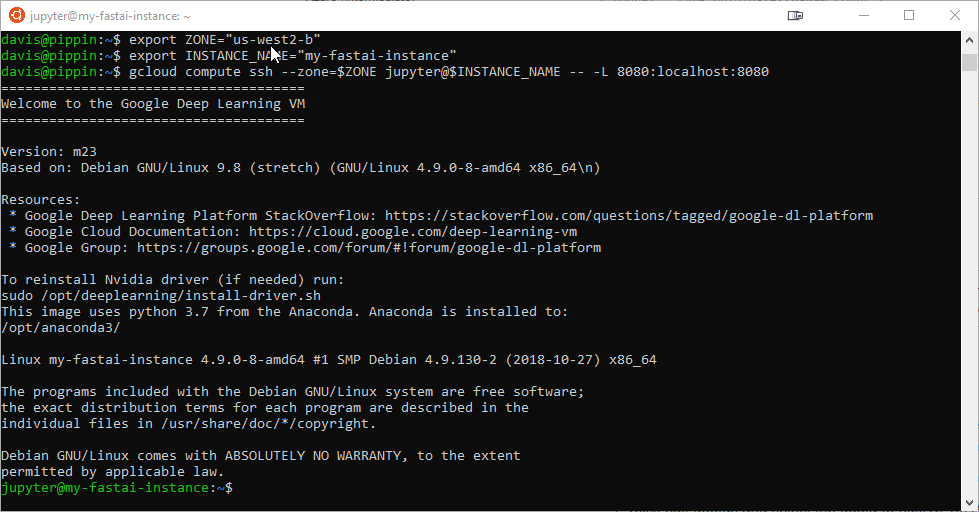

Once the instance is running, I can log in over SSH (gcloud creates an SSH keypair if needed):
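The status check and login can be wrapped as below. The helper names are mine, and the instance name and zone match the create command above. On first use, `gcloud compute ssh` generates a key pair under `~/.ssh/` (by default `google_compute_engine`) if one doesn’t already exist.

```bash
# Hypothetical helpers for the instance created above.
instance_status() {
  # Prints the VM state, e.g. RUNNING or TERMINATED
  gcloud compute instances describe my-fastai-instance \
    --zone="us-west2-b" --format="value(status)"
}
ssh_instance() {
  # Opens an SSH session, generating a key pair on first use
  gcloud compute ssh my-fastai-instance --zone="us-west2-b"
}
```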

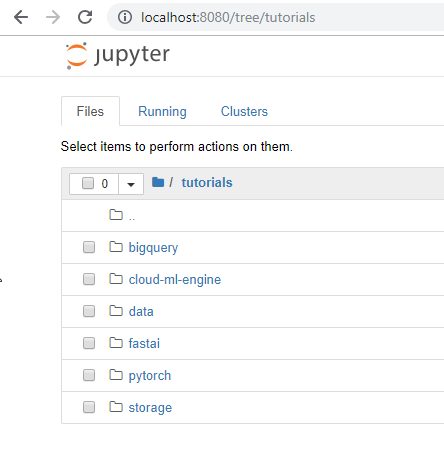

After pulling a few updates via git and conda, I was able to open the Jupyter Notebook in my browser through a port forward to the GPU instance:
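The port forward itself is an `ssh -L` option passed through `gcloud compute ssh`. This sketch assumes, as the fast.ai GCP guide described at the time, that the Deep Learning VM image serves Jupyter on port 8080 for a `jupyter` user. The helper name is hypothetical.

```bash
# Hypothetical helper: tunnel local port 8080 to Jupyter on the instance.
jupyter_tunnel() {
  # Everything after "--" is passed straight to ssh; -L LOCAL_PORT:HOST:REMOTE_PORT
  gcloud compute ssh jupyter@my-fastai-instance --zone="us-west2-b" \
    -- -L 8080:localhost:8080
}
```

Run `jupyter_tunnel`, keep the session open, and browse to http://localhost:8080.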

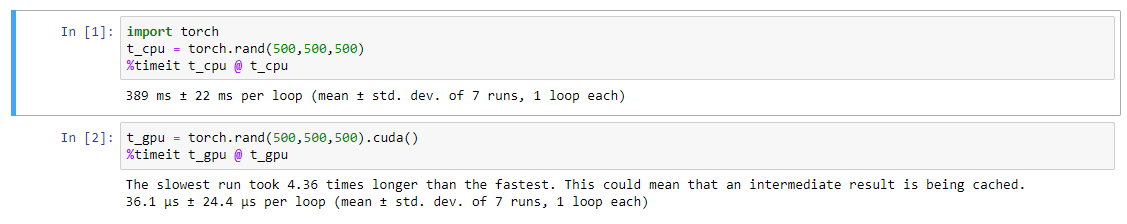

PyTorch CPU/GPU Test

Following my earlier test on the laptop, I reran the same PyTorch CPU/GPU test on this cloud instance.
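Separately from that benchmark, whose code isn’t reproduced here, a quick sanity check that the NVIDIA driver loaded and PyTorch can see the GPU might look like this. The helper name is hypothetical, and it assumes `python` resolves to the image’s PyTorch environment.

```bash
# Hypothetical helper: confirm the driver and CUDA-enabled PyTorch are usable.
gpu_sanity_check() {
  # GPU model, driver version, and memory as reported by the NVIDIA driver
  nvidia-smi --query-gpu=name,driver_version,memory.total --format=csv
  # PyTorch version, whether CUDA is available, and the first device's name
  python -c "import torch; ok = torch.cuda.is_available(); print(torch.__version__, ok, torch.cuda.get_device_name(0) if ok else 'no GPU')"
}
```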

Clearly, the CPU performance of this cloud instance (389 ms) destroys that of my old laptop (10.3 s), running roughly 26 times faster. As well, this GCP instance does not error out when the CUDA GPU routines are tested. Even better, I can stop the instance when not in use. Very nice!
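Stopping and restarting can also be done from the CLI. The helper names in this sketch are hypothetical. Note that a stopped instance still incurs charges for its 200 GB boot disk, and a preemptible instance like this one can be stopped by GCP at any time.

```bash
# Hypothetical helpers: stop the VM when idle, start it again when needed.
stop_instance() {
  gcloud compute instances stop my-fastai-instance --zone="us-west2-b"
}
start_instance() {
  gcloud compute instances start my-fastai-instance --zone="us-west2-b"
}
```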