After bundling the lane detection algorithm into a Docker container, it’s now time to run against more dashcam footage. This post will cover deploying on GKE, Google’s managed Kubernetes service. The goal will be to process videos in parallel on multiple workers.

Creating a Cluster

Following my earlier GKE post, I set up a fresh k8s cluster on GKE. In this case, the default set of three small VMs was adequate for basic testing.

gcloud container clusters create gke-lane-detect --num-nodes=3

Creating Persistent Storage

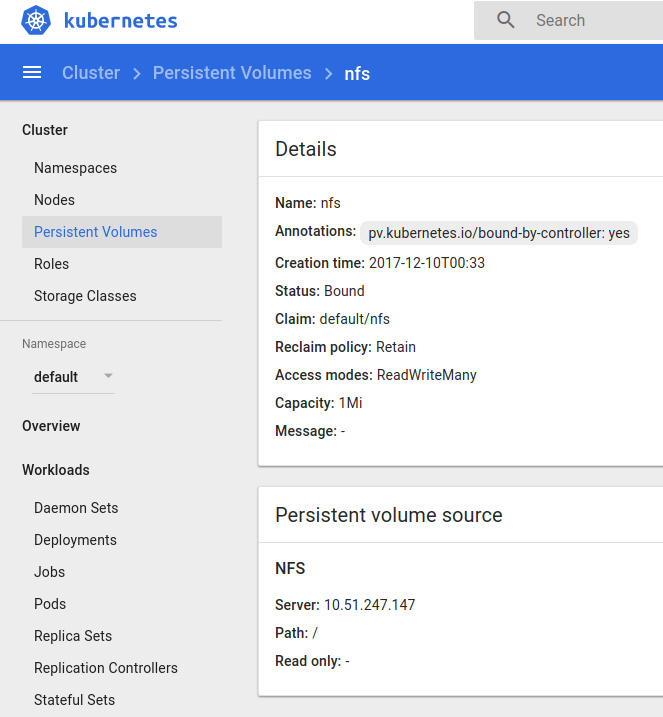

Because my lane detection Docker container reads from and writes to a Docker volume, I ran into a problem: GKE only supports ReadWriteOnce persistent volumes. As an alternative, I deployed an NFS server in the k8s cluster, providing shared read/write storage to all workers. The NFS server needs a GCE disk to back it:

gcloud compute disks create --size=200GB gke-nfs-disk

With the GCE disk created, the next step was to create a PersistentVolume and a matching PersistentVolumeClaim within the k8s cluster. The PersistentVolume looked like this:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs
spec:
  capacity:
    storage: 1Mi
  accessModes:
    - ReadWriteMany
  nfs:
    server: NFS_IP
    path: "/"

Most importantly, this PV is of type NFS with access mode ReadWriteMany, unlike a plain old GCE disk mounted in GKE, which supports only ReadWriteOnce. NFS_IP is a placeholder for the address of the NFS server.

This will allow multiple k8s workers to write to the NFS share at the same time as they split up processing of the dashcam video files.

Transferring Video Data

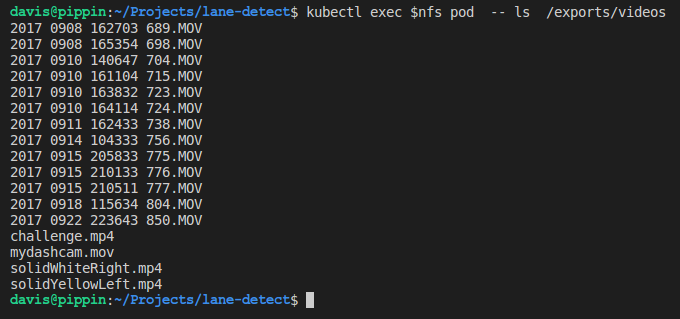

With the NFS service started, I copied up about 4 GB of sample dashcam footage from my laptop to the NFS pod:

nfs_pod=$(kubectl get pods | grep nfs-server | cut -d ' ' -f 1)

kubectl cp ./videos $nfs_pod:/exports/videos

Using kubectl exec, I then checked that the uploaded videos had arrived on the NFS pod.

Creating Kubernetes Jobs

With the dashcam footage ready on the NFS server in the k8s cluster, the next step was to create a set of lane detection workers as seen in this k8s resource spec:

apiVersion: batch/v1
kind: Job
metadata:
  name: lane-detect
spec:
  parallelism: 3
  template:
    metadata:
      name: lane-detect
    spec:
      containers:
      - name: lane-detect
        image: guydavis/lane-detect
        workingDir: /mnt
        command: [ "/usr/bin/python3", "-u", "/opt/lane_detect.py" ]
        args: [ "videos/" ]
        imagePullPolicy: Always
        volumeMounts:
        - name: my-pvc-nfs
          mountPath: "/mnt"
      restartPolicy: Never
      volumes:
      - name: my-pvc-nfs
        persistentVolumeClaim:
          claimName: nfs

This job worked on the mounted videos directory, with each worker processing dashcam files in a random order and skipping over those already processed by other workers. With parallelism set to 3, the Job ran three worker pods at once, putting all of the nodes in the Kubernetes cluster to work and reaching completion much faster than a standalone system.
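The coordination logic inside lane_detect.py is not shown in this post, so the following is only a minimal sketch of one way workers sharing an NFS mount could process files in random order while skipping work already taken. The names `claim` and `process_all`, the `.claimed` marker suffix, and the `*.mp4` pattern are all hypothetical and chosen for illustration. It assumes that exclusive file creation is atomic on the shared mount, which on Linux requires NFSv3 or later.

```python
import os
import random
from pathlib import Path


def claim(video: Path) -> bool:
    """Try to claim a video by creating a marker file beside it.

    O_CREAT | O_EXCL makes the creation fail if the marker already
    exists, so only one worker can win the claim for a given video.
    The ".claimed" suffix is a hypothetical naming choice.
    """
    marker = video.parent / (video.name + ".claimed")
    try:
        fd = os.open(marker, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False  # another worker already took this video
    os.close(fd)
    return True


def process_all(video_dir, process, pattern="*.mp4"):
    """Process every unclaimed video in video_dir, in random order.

    `process` is a callable taking the video path; it stands in for
    the real lane detection step. Returns the names of the videos
    this worker handled.
    """
    videos = list(Path(video_dir).glob(pattern))
    random.shuffle(videos)  # random order reduces contention between workers
    handled = []
    for video in videos:
        if claim(video):
            process(video)
            handled.append(video.name)
    return handled
```

Each worker pod would call something like `process_all("videos/", detect_lanes)`, where `detect_lanes` is again a placeholder. A marker left behind by a crashed worker would block that video from being retried, so a real implementation would likely need some cleanup or timeout on stale claims.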

Conclusions

Deploying this batch workload on Kubernetes was an interesting exercise that demonstrated the benefits of parallel execution. With the easy scalability of GKE, this is a promising avenue for future performance gains.

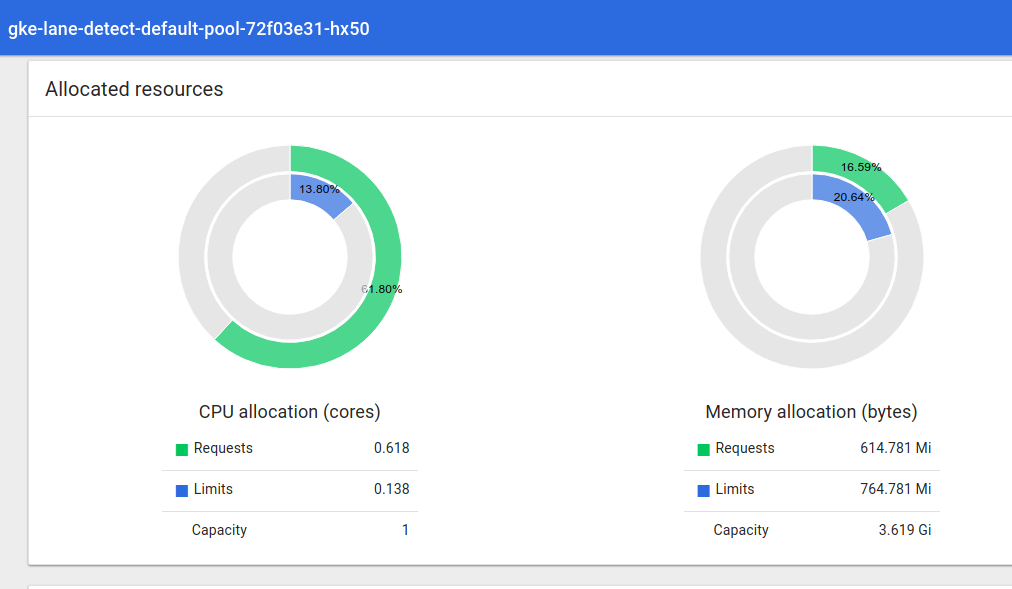

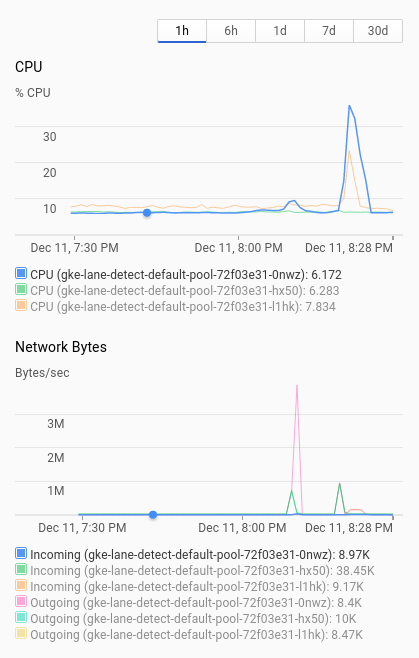

I found both the GKE and GCP admin consoles handy for monitoring CPU usage as processing occurred. The two screenshots above show the GKE view first, followed by the GCP view.

And finally, here’s a short example of processed dashcam footage:

Next Steps

While this approach demonstrated lane detection using Kubernetes to scale, there is room for further improvement in several places:

- Obviously, improving the algorithm and its Python implementation could yield the biggest gains.

- Next would be the NFS-based data pipeline, which may become a bottleneck with higher numbers of workers. Alternatives such as GlusterFS and HDFS may be promising.

- Finally, once the improvements above are in place, one can scale up the k8s cluster to throw more compute resources at the problem.

More in this series…

- Lane Detection in Images - first attempt.

- Improved Lane Detection - improved approach.

- Handling Dashcam Footage - processing video.

- Deploying in Docker - bundling as a Docker image.

- Running on Microsoft Cloud - scaling on Azure.