After I completed a 5-day course last month covering Google’s current AI offerings, I wanted to play around in the Google AI Studio a bit more. In particular, I wanted to check out the Gemini LLM via some prompts. Initially, I used the Gemini v1.5 Flash model.

Gemini v1.5 Sample Prompts

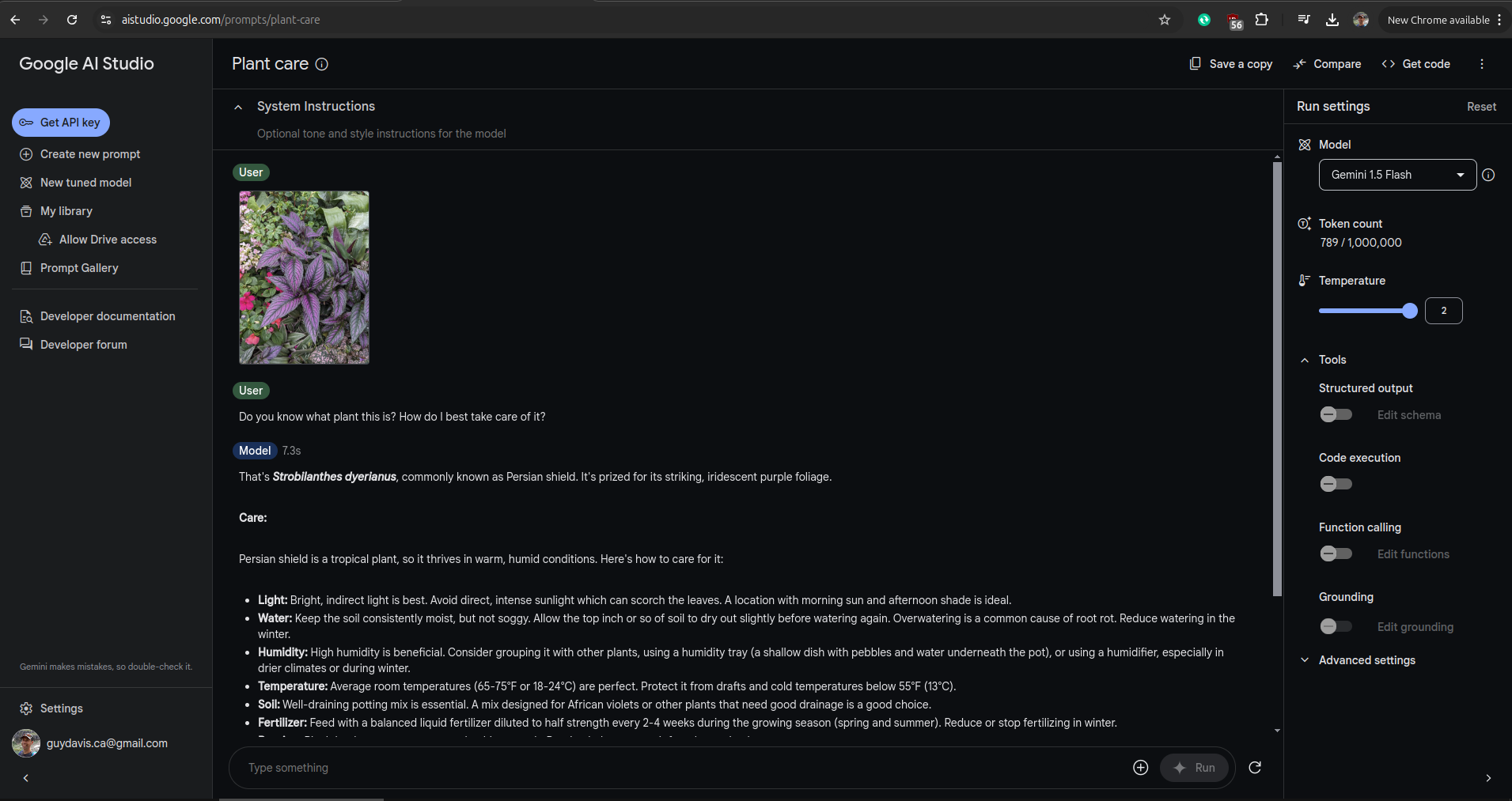

Here’s an example prompt in the Studio, asking about plant care based on a photo input.

Along the right side of the Studio window, the model’s settings can be tweaked. For example, changing the ‘Temperature’ value affects how creative the response is.

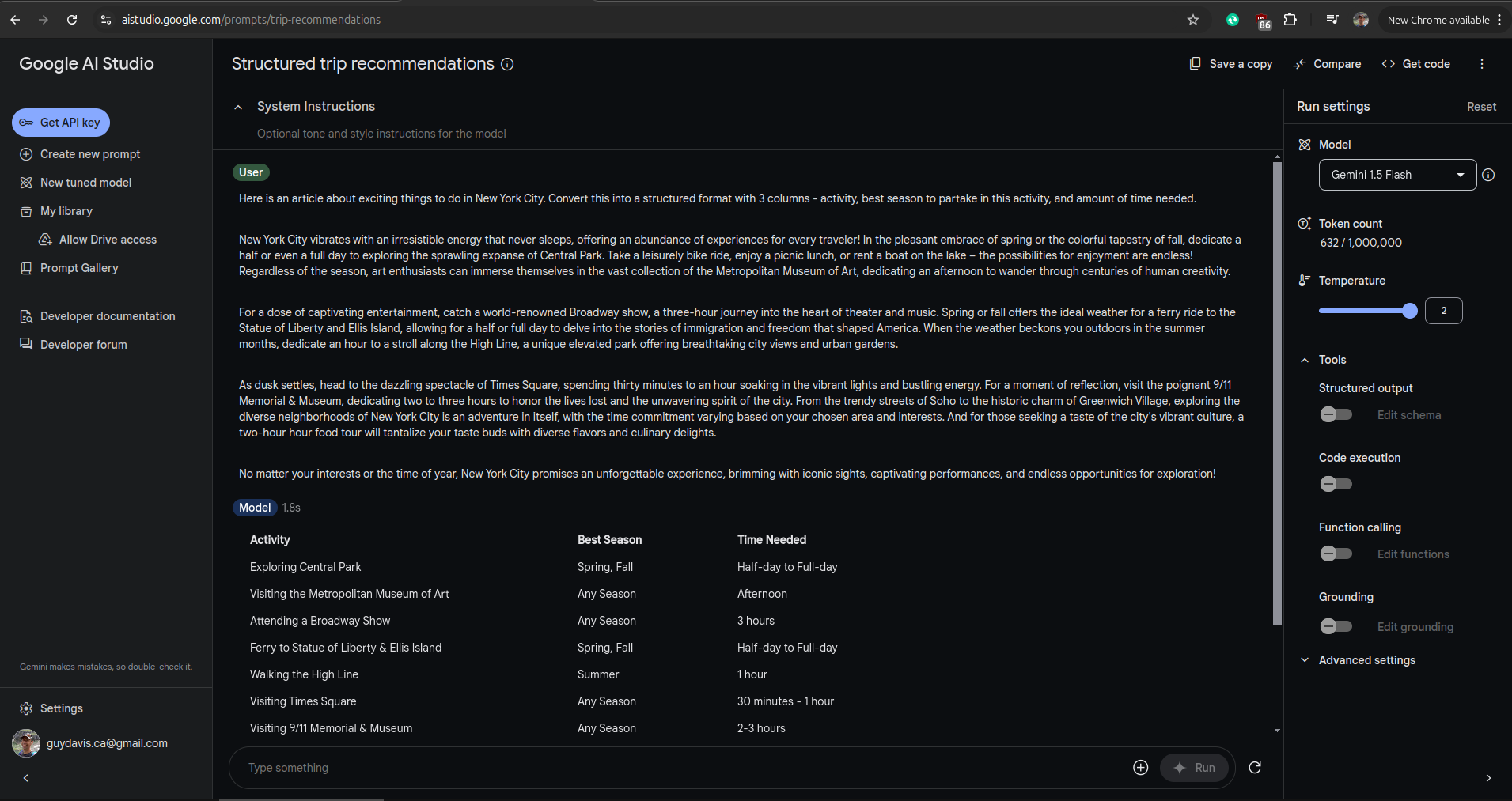
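To get a feel for why the ‘Temperature’ value changes how creative a response is, here is a minimal sketch of temperature-scaled softmax, the general technique LLMs commonly use when picking the next token. This is not Gemini’s actual code; the function name and the three scores are made up for illustration. A setting of 0 is usually treated as "always pick the top token", which this sketch simply rejects.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens (not real model output).
logits = [2.0, 1.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature nearly all of the probability lands on the top-scoring token, so the output is more predictable. At a higher temperature the probabilities even out, so less likely tokens get picked more often.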

The Prompt Gallery had some other interesting prompts, including one showing structured text output:

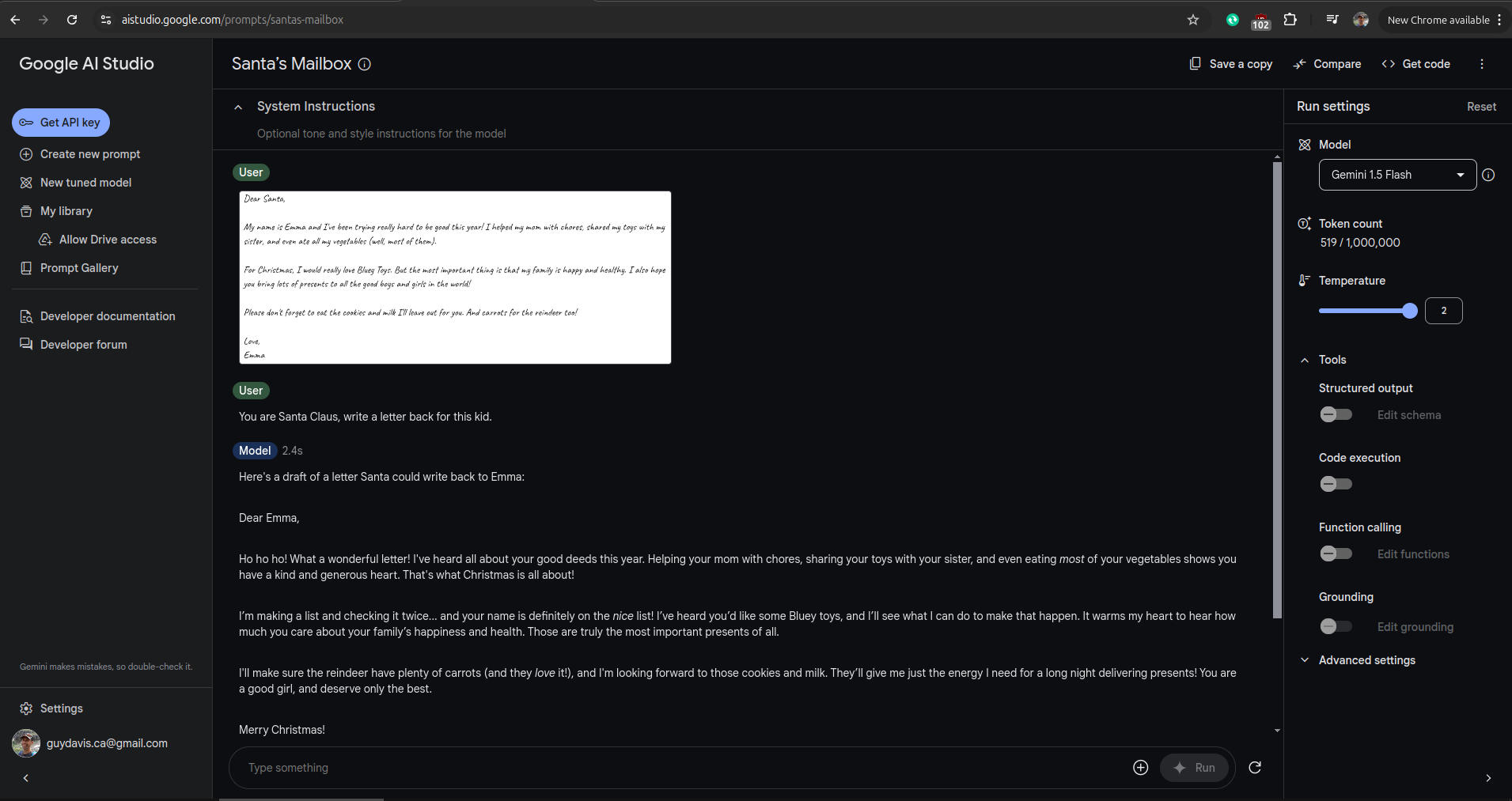

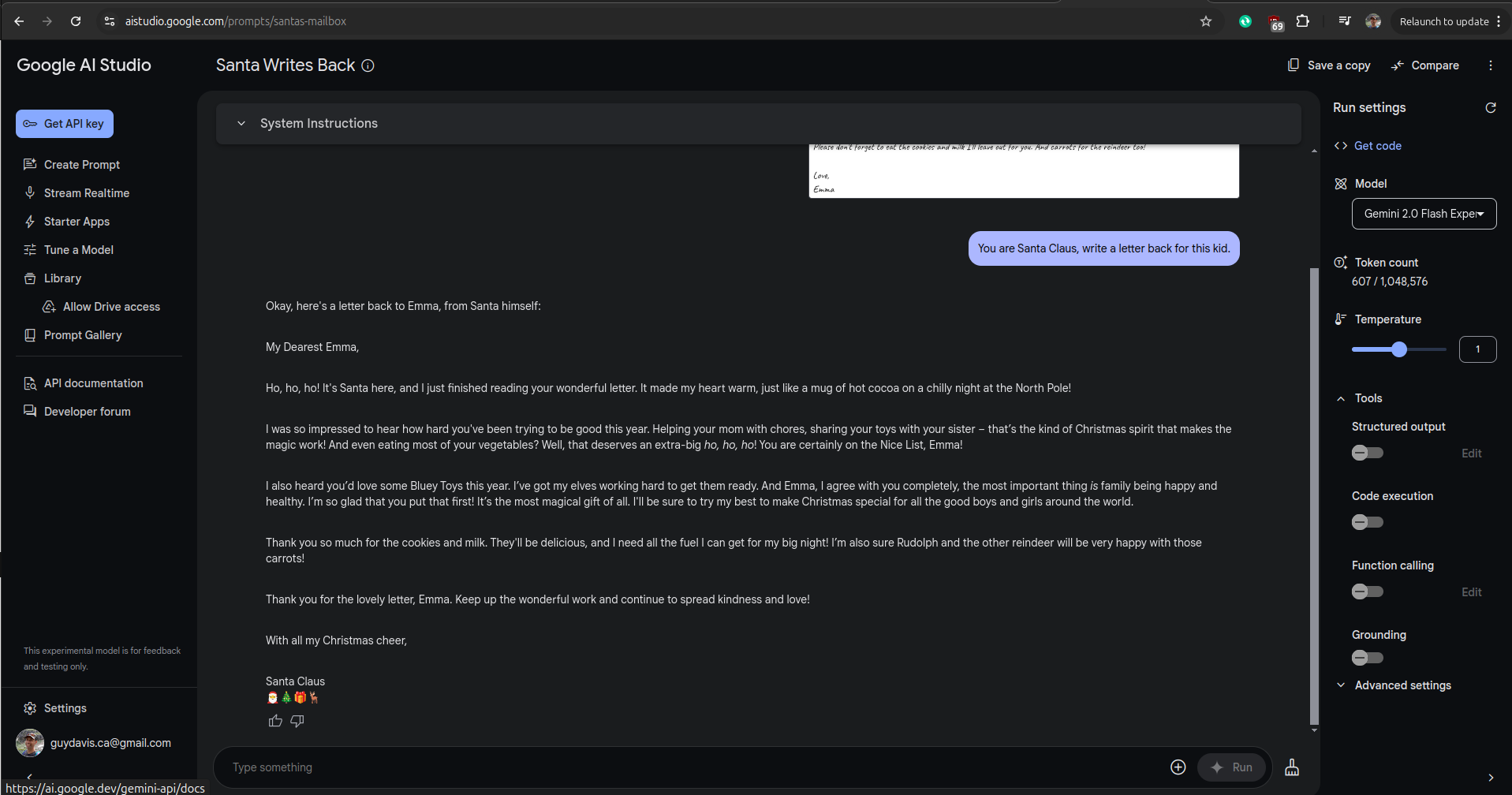
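Structured output is handy because a program can check it before using it. Here is a rough sketch of that idea, assuming the model replies with a JSON array. The reply string, the field names, and the `parse_recommendations` helper are all hypothetical and not taken from the Studio prompt.

```python
import json

# Hypothetical model reply; the field names are illustrative only.
reply = '[{"destination": "Lisbon", "days": 3}, {"destination": "Kyoto", "days": 5}]'

# Fields each item is expected to carry, with their types (an assumption).
REQUIRED = {"destination": str, "days": int}

def parse_recommendations(text):
    """Parse a JSON array and check each item has the expected typed fields."""
    items = json.loads(text)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array")
    for item in items:
        if not isinstance(item, dict):
            raise ValueError("expected each item to be a JSON object")
        for key, kind in REQUIRED.items():
            if not isinstance(item.get(key), kind):
                raise ValueError(f"bad or missing field: {key}")
    return items

trips = parse_recommendations(reply)
print(trips[0]["destination"])
```

If the model drifts from the expected shape, the check fails loudly instead of passing bad data along.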

Having tested OCR a few years ago, I found it an impressive feat that the model could read a pasted image of a letter and reply to it as Santa Claus.

Gemini v2.0 Sample Prompts

A few days after I posted the above using Gemini v1.5, Google announced Gemini v2.0 and made it available to test within Google AI Studio. So, I went back and tried out the same sample prompts.

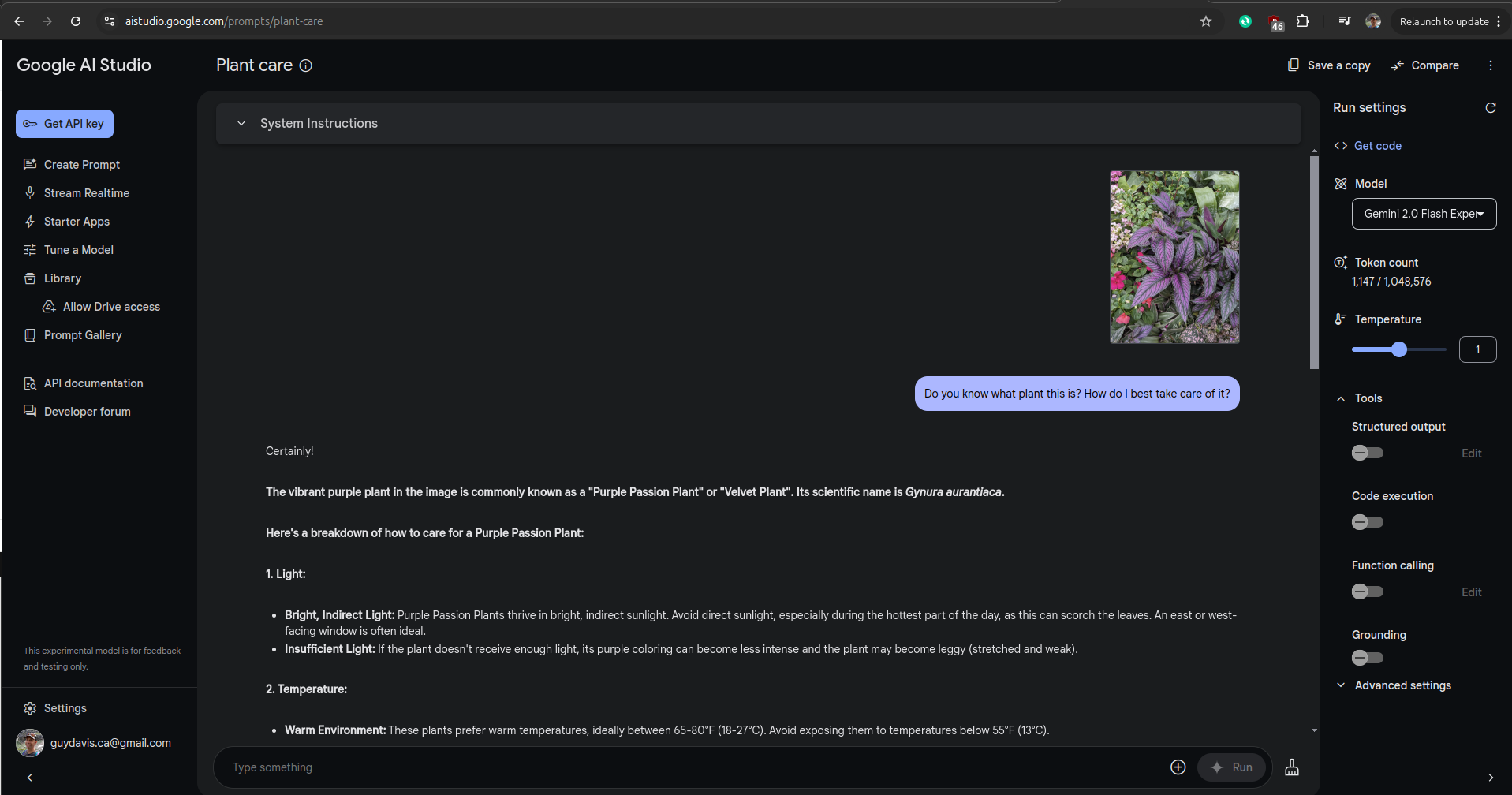

Interestingly, when I gave the Gemini 2.0 Flash experimental model the same input photo and prompt as before, it identified a different plant than Gemini v1.5 had. As I am not a botanist or florist, I don’t actually know which model’s labelling (if either) is correct. This is a good example of how non-experts can be convinced by LLM output, even when it may be plain wrong.

Gemini v1.5 and v2.0 both handled the structured output task for trip recommendations nearly identically.

The letter from Santa to Emma seemed to have a little more heart using the Gemini v2.0 model.

P.S. For those interested, the 5-day course I took is now available as a Learning Guide, so don’t feel left out if you missed it the first time.

More in this series…

- Google Gemini - Google Gemini

- Anthropic Claude - Anthropic Claude

- Llama 3 - Llama 3

- ChatGPT 4o - ChatGPT 4o

- Anthropic Claude in Canada - Claude eh?

- LLMs on Android - AI in your pocket

- Google Imagen3 - AI Image Generation

- Azure AI Studio - AI on MS Azure

- Google Gemma - Google Gemma v3 released.